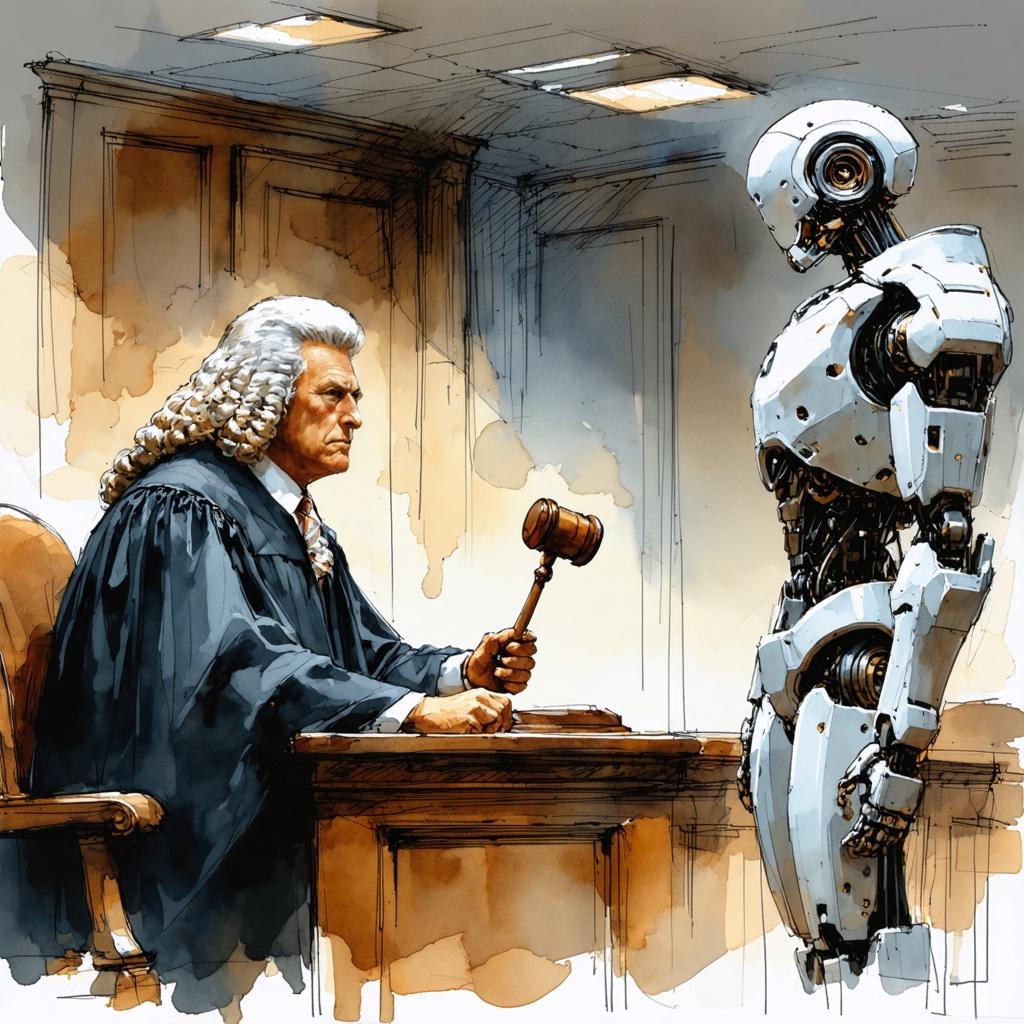

When AI systems produce things like artwork, text or software, it’s not always clear who, if anyone, owns these creations.

Current intellectual property laws were written with human authors in mind, which leaves a gap when it comes to AI-generated content.

This legal ambiguity makes it harder to determine who holds authorship, ownership, or even copyright over what AI produces, and that uncertainty creates challenges for the businesses, creators, and developers who use AI.

As technology races ahead of regulation, it’s clear we need proper legal frameworks for AI content. Clearly defining ownership will be crucial to encouraging innovation while ensuring fair protection for everyone involved.

The Current Legal Framework

Existing legal systems struggle to establish ownership of AI-generated content.

In the U.S., copyright law protects only works made by humans, or works with substantial human creative input, which leaves many AI-generated pieces without coverage. The European Union also emphasizes human authorship as a condition for copyright eligibility.

The United Kingdom offers limited protection for computer-generated works: copyright goes to the person who made the arrangements necessary for the work’s creation.

This patchwork of rules makes it tricky for businesses and creators to operate. Adding to the confusion, some AI providers set their own licensing terms, which can affect what rights users actually receive over the AI’s outputs and further muddy questions of ownership.

Who (If Anyone) Owns AI-Generated Content?

Current intellectual property laws fit poorly with how AI works. Debate continues over whether ownership rests with the organizations that build these systems, the individuals who provide the input, or no one at all, which would leave this type of content unprotected. Each position raises its own legal, ethical, and practical challenges.

1. The developer or company that created the AI

One argument is that the developers or companies behind these AI systems should own the output.

They build the systems, design the algorithms, and train the models.

Ownership is often tied to contracts and terms of use. For example:

- OpenAI lets users own the output they generate but keeps the right to use it for research or compliance reasons.

- Midjourney grants different ownership terms depending on the subscription tier and limits the rights of free-tier users.

These contracts determine how much control developers retain or pass on to users, so ownership rules can vary from one provider to the next.

Developers might also claim indirect authorship, since their choices of datasets and model architecture shape how the AI generates content.

2. The user who inputs prompts and guides the AI

Another perspective holds that ownership should belong to users, because they craft the prompts and guide the AI toward specific outcomes. Whether they’re creating software, writing copy, or designing art, users choose inputs, refine responses, and make sure the results match their objectives.

In this sense, they take on the role of collaborators and add intentionality and creativity to the process.

However, the law is unsettled here. Copyright usually recognizes authorship only when a work is original and reflects human creativity. When the output is seen as the product of a largely mechanical process rather than the user’s own creative choices, user claims may not hold up legally.

Things get even murkier when people substantially edit or transform AI-generated content. This mix of human and machine effort makes it harder to decide where the machine’s contribution ends and human authorship begins.

3. No one: AI-generated works might belong to the public domain

Some believe that AI-generated content cannot be owned at all and belongs in the public domain. In countries where copyright law requires human authorship, AI content is commonly seen as ineligible for protection. This approach sidesteps concerns about granting exclusive rights to work created without active human involvement.

But if something isn’t owned, it’s easier for others to copy or plagiarize it. It could also be commercialized by individuals or organizations unrelated to the original users or creators. Sectors that depend on intellectual property protection, such as the creative industries, might invest less in innovation if they can’t secure exclusive rights over AI-generated works.

Until laws clarify these issues, businesses and individuals will need to adapt their strategies to make the most of, and protect, AI-driven outputs.

AI and Copyright Challenges

Legal uncertainty creates practical risks. AI outputs can unintentionally resemble existing copyrighted works, which could open the door to infringement claims.

Another issue is that AI systems are trained on data that may include copyrighted material, raising concerns about the rights of the original copyright holders, including their right to control derivative works. Without well-defined laws, businesses may find it difficult to enforce exclusive rights or avoid liability.

This lack of clarity also affects industries like entertainment and software development, where clear copyright structures are essential for monetizing creative works.

Without legal recognition of AI-generated content, companies struggle both to protect new works and to manage the risk of misappropriation. These issues highlight the urgent need for copyright law to keep pace with advances in AI.

The Gray Area of Human-AI Collaboration: What If an Artist Edits AI-Generated Content?

As noted above, copyright questions become even more complicated when human creators modify AI-generated content.

Editing images, rewriting text, or restructuring code involves creative input that might move an AI output into a new category. The challenge lies in determining whether such changes produce original works eligible for independent protection, or whether the results remain derivative of the AI output and fall outside that protection.

Courts haven’t reached agreement on what counts as sufficient human involvement. Minor changes generally don’t meet the originality standard; significant creative transformation is usually required.

Even heavily edited work may still count as partially AI-generated. For this reason, companies are advised to adopt internal policies that track and document human input.

Collaboration between humans and AI has great potential, especially with tools like Stryng, but intellectual property outcomes remain hard to predict under current legal systems.

Potential Future Regulations

Some policymakers are considering models that account for the role of human input, the developer’s responsibility, and whether the AI relied on copyrighted material.

One proposed approach is international harmonization to reduce jurisdictional inconsistencies, which could simplify enforcing intellectual property rights across borders.

Other ideas include frameworks for co-authorship, more straightforward usage permissions, and authorless copyright categories for works created by AI.

Other proposals aim to balance fair compensation for creators whose material is used to train AI with protections for users who generate new content.

Legislative change is slow, however, and will likely require consultations and phased implementation.

Summary

AI-generated content is challenging intellectual property law in ways we haven’t seen before, and legal systems still lack clear answers about ownership. Open questions remain about the rights of developers and users, and about how much of this content belongs in the public domain.

Copyright laws in most places emphasize human creativity, which means that a lot of AI-generated work doesn’t qualify for protection. This leaves businesses, creators, and developers vulnerable to ownership disputes.

Efforts like international regulatory alignment or co-authorship models could help, but progress is slow. Policies need to recognize the real-world complexity of how humans and AI work together, so stakeholders can move forward with confidence and less uncertainty.